Oct 15, 2024

Navigating AI in your Infrastructure: Dos, Don'ts, and Why It Matters


Introduction

GenAI is everywhere. But very often, the cool and exciting demos don’t work the same way in production. And even worse, some generated content might open the door to security risks. Here's "The Dos and Don'ts with AI and Your Infra Nowadays" and why it matters.

1. Don't blindly generate your IaC code

Code generation with AI is just amazing. I use GPT (or Cursor) practically every day. But let's be real: IaC code generation isn't mature enough to be 100% trusted in prod.

1.1 First of all, how does GenAI work?

At its core, a Large Language Model (LLM) like GPT-4 is a probability model. It works by encoding text—including code—into a latent space (imagine a high-dimensional space where similar pieces of text are closer together). Then it generates code by decoding from that space based on statistical probabilities. Essentially, it predicts the next word (or token) in a sequence by considering the context.

Example with Python:

Let's say the model has been trained extensively on Python code. If you prompt it with the start of a function that adds two numbers a and b, stopping right after return, the model predicts the next token based on probabilities learned during training. It knows that after return, in the context of a function adding two numbers, the next likely tokens are a + b, so that is what it generates.

Here's the step-by-step token prediction:
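As a rough illustration, the loop below mimics that process with a hand-written lookup table. Everything in it is an assumption made for the example: the NEXT_TOKEN_PROBS table, the probabilities, and the greedy_decode helper are invented, whereas a real LLM computes these probabilities with a neural network over a much larger vocabulary.

```python
# Toy next-token table: context (tuple of tokens so far) -> {candidate: probability}.
# The numbers are made up for illustration; a real model computes them.
NEXT_TOKEN_PROBS = {
    ("return",): {"a": 0.85, "b": 0.10, "None": 0.05},
    ("return", "a"): {"+": 0.90, "-": 0.06, "*": 0.04},
    ("return", "a", "+"): {"b": 0.95, "a": 0.03, "1": 0.02},
    ("return", "a", "+", "b"): {"<end>": 0.99, "+": 0.01},
}


def greedy_decode(context, table, max_steps=10):
    """Repeatedly append the most probable next token until <end> or no entry."""
    tokens = list(context)
    for _ in range(max_steps):
        candidates = table.get(tuple(tokens))
        if candidates is None:
            break  # the toy table has no prediction for this context
        best = max(candidates, key=candidates.get)
        if best == "<end>":
            break
        tokens.append(best)
    return tokens


completion = greedy_decode(["return"], NEXT_TOKEN_PROBS)
print(" ".join(completion))  # return a + b
```

Picking the single most probable token each time is called greedy decoding; production systems often sample from the distribution instead, which is one reason the same prompt can give different answers.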

At each step, the model selects the most probable next token based on the context so far. Similarly, if you ask it to write a function to multiply two numbers:

Prompt: "Write a function to multiply two numbers in Python."

Generated Code:
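A plausible result looks like the sketch below. It is an illustrative example of what a model typically returns for this prompt, not a captured model output.

```python
def multiply(a, b):
    """Return the product of two numbers."""
    return a * b


print(multiply(3, 4))  # 12
```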

Again, the model uses learned probabilities to generate each token, producing correct and functional code because it's seen lots of similar examples during training.

Example with Terraform:

Now, suppose the model has seen very few examples of advanced Terraform configurations for AWS VPC peering. If you prompt it to generate a Terraform script for VPC peering with specific settings, it might produce incomplete or incorrect code because the latent space representation for this niche is sparse.

Here's what is typically missing or wrong in the result you might get:

Missing auto_accept parameter: Without this, the peering connection might not be established automatically.

No tags or additional configurations: Important metadata and route settings are absent.

Potentially incorrect IDs: Using placeholder VPC IDs without context can lead to errors.
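Gaps like these can be caught mechanically before anyone applies the code. The sketch below is a minimal illustration, assuming the generated resource has already been parsed into a plain Python dictionary. The dictionary layout, the review_peering helper, and the placeholder IDs are hypothetical; vpc_id, peer_vpc_id, auto_accept, and tags are argument names of the aws_vpc_peering_connection resource.

```python
# Hypothetical, already-parsed view of an AI-generated aws_vpc_peering_connection.
generated = {
    "vpc_id": "vpc-12345678",       # placeholder ID, as a model might emit
    "peer_vpc_id": "vpc-87654321",  # placeholder ID
}

REQUIRED = ("vpc_id", "peer_vpc_id")
RECOMMENDED = ("auto_accept", "tags")  # optional in Terraform, but worth reviewing


def review_peering(resource):
    """Return a list of human-readable findings for one peering resource."""
    findings = []
    for key in REQUIRED:
        if key not in resource:
            findings.append(f"missing required argument: {key}")
    for key in RECOMMENDED:
        if key not in resource:
            findings.append(f"missing recommended argument: {key}")
    for key in REQUIRED:
        value = resource.get(key, "")
        # A literal ID instead of a reference to another resource deserves a second look.
        if isinstance(value, str) and value.startswith("vpc-"):
            findings.append(f"{key} is a hard-coded ID: {value}")
    return findings


for finding in review_peering(generated):
    print(finding)
```

A check like this does not prove the configuration is correct; it only makes the omissions listed above visible so a reviewer cannot overlook them.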

In contrast, a correct configuration includes route settings and proper references, which the AI might miss due to limited training data in this area.

1.2 But here's the catch for code quality

LLMs are only as good as the data they're trained on. Terraform and IaC tools are relatively new (about 10 years old), so the public code the model was trained on (mostly from GitHub) contains comparatively little of it. More importantly, most companies don't put their infra code on GitHub for security reasons. As a result, the encoding space for this kind of code is sparse, making it harder for the model to generalize.

Another Angle:

Imagine encoding two similar resources in Terraform. Due to the sparse data, when decoding, the model might mix things up, leave out critical parts, or extrapolate content from the original prompt (now, it's more about framing the right question than coding the answer!). It would be much less likely to do that in a language like Python, where there's far more data to learn from.

As the saying goes, "garbage in, garbage out." In this case: "small dataset in, lower accuracy out."

1.3 Even worse, it can cause some security issues

Let's recap. Most LLMs were trained on GitHub, with at most 10 years of data, very few open IaC repositories and, even worse, repos that might contain potential attack vectors.

Example: An attacker might have uploaded Terraform code with security flaws, like open security groups. The LLM, trained on this code, could generate similar insecure code for you, for instance a security group that accepts inbound traffic on every port from any address.

Why This Is Dangerous:

Opens All Ports to the World: This could expose your servers to attacks.

Hard to Spot if You're Not Careful: If you trust the AI output blindly, you might miss this.
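A simple deterministic check helps here. The sketch below assumes the security group's ingress rules have been parsed into Python dictionaries; the ingress_rules data and the is_world_open helper are hypothetical, while from_port, to_port, protocol, and cidr_blocks mirror the argument names of Terraform's AWS security group rules.

```python
# Hypothetical parsed ingress rules from an AI-generated security group.
ingress_rules = [
    {"from_port": 0, "to_port": 65535, "protocol": "tcp", "cidr_blocks": ["0.0.0.0/0"]},
    {"from_port": 443, "to_port": 443, "protocol": "tcp", "cidr_blocks": ["10.0.0.0/16"]},
]

ANYWHERE = "0.0.0.0/0"


def is_world_open(rule):
    """True if the rule exposes all ports (or all protocols) to any IPv4 address."""
    open_to_world = ANYWHERE in rule.get("cidr_blocks", [])
    all_protocols = rule.get("protocol") == "-1"  # "-1" means every protocol in AWS
    all_ports = rule.get("from_port", 0) == 0 and rule.get("to_port", 0) >= 65535
    return open_to_world and (all_protocols or all_ports)


risky = [rule for rule in ingress_rules if is_world_open(rule)]
print(f"{len(risky)} risky rule(s) found")  # 1 risky rule(s) found
```

This only covers the one pattern described above; dedicated policy scanners check many more, but even a small rule like this catches the mistake that is easiest to miss in a quick review.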

When it comes to your infra—the core of your cloud—such vulnerabilities are even more dangerous.

2. Nevertheless, it's improving

But things are getting better. Improvements made day by day give us hope that IaC code generation will improve considerably in the near future.

2.1 Bigger models generating synthetic datasets for smaller models

Big models can generate better outputs. We use them to create synthetic datasets to make the input data for smaller models "denser," so they perform better.

Example: Using a big model to generate various secure Terraform configurations, filling in the gaps where real data is sparse. This helps the smaller models learn better patterns.

2.2 Positively biased datasets

These are datasets improved on purpose by curating and including only best practices.

How It Works:

Filtering Out Bad Examples: Removing insecure or bad code from the training data.

Adding Good Examples: Including well-written, secure code snippets.

Why It Works: The model learns from higher-quality data, reducing the chance of generating insecure or low-quality code.
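The filtering step can be sketched in a few lines. This is a deliberately naive illustration: the samples, the RED_FLAGS substrings, and the curate helper are assumptions for the example, and a real curation pipeline would rely on a proper policy scanner rather than string matching.

```python
# Hypothetical training samples: raw Terraform snippets collected for fine-tuning.
samples = [
    'cidr_blocks = ["0.0.0.0/0"]',
    'cidr_blocks = ["10.0.0.0/16"]',
    'acl = "public-read"',
    'encrypted = true',
]

# Illustrative red flags only; not an exhaustive list of insecure patterns.
RED_FLAGS = ('"0.0.0.0/0"', '"public-read"')


def curate(snippets, red_flags=RED_FLAGS):
    """Keep only snippets that contain none of the red-flag substrings."""
    return [s for s in snippets if not any(flag in s for flag in red_flags)]


curated = curate(samples)
print(f"kept {len(curated)} of {len(samples)} samples")  # kept 2 of 4 samples
```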

2.3 The future, contextual AI?

While current AI models have limitations, the future looks promising with the development of what we can call Contextual AI. This approach involves models that understand and incorporate the specific context of your environment. For information retrieval, you can see it as an equivalent to RAG (Retrieval-Augmented Generation).

Highlights:

Integration with your infra: Future AI could tailor code to your specific setup by accessing real-time information about your infrastructure and organizational standards.

Policy-Aware Generation: By understanding your security policies and compliance requirements, Contextual AI can generate IaC that adheres to your rules, reducing the risk of insecure configurations.
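To make the RAG comparison concrete, here is a minimal sketch of the retrieval half: pick the policies most relevant to a request and put them in front of the model. The POLICIES list, the word-overlap scoring, and the helper names are all assumptions for the example; real systems usually rank with embeddings rather than shared words.

```python
# Hypothetical organizational policies the model should see before generating IaC.
POLICIES = [
    "Security groups must never allow ingress from 0.0.0.0/0 on port 22.",
    "All S3 buckets must have encryption enabled.",
    "Every resource must carry an owner tag and a cost-center tag.",
]


def tokenize(text):
    """Lowercase word set, with surrounding periods and commas stripped."""
    return {word.strip(".,").lower() for word in text.split()}


def retrieve(request, policies, top_k=2):
    """Rank policies by how many words they share with the request."""
    request_words = tokenize(request)
    ranked = sorted(
        policies,
        key=lambda policy: len(request_words & tokenize(policy)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(request, policies):
    """Prepend the most relevant policies to the user's request."""
    context = "\n".join(f"- {policy}" for policy in retrieve(request, policies))
    return f"Follow these policies:\n{context}\n\nTask: {request}"


print(build_prompt("Create a security group allowing ingress on port 22", POLICIES))
```

The point of the design is that the rules travel with the request, so the model does not have to guess your standards from its training data.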

3. But "Synthesis AI" is there

We've just seen that code generation can be tricky (even if the next 1 to 5 years may surprise us a lot).

3.1 Synthesis AI vs Generative AI

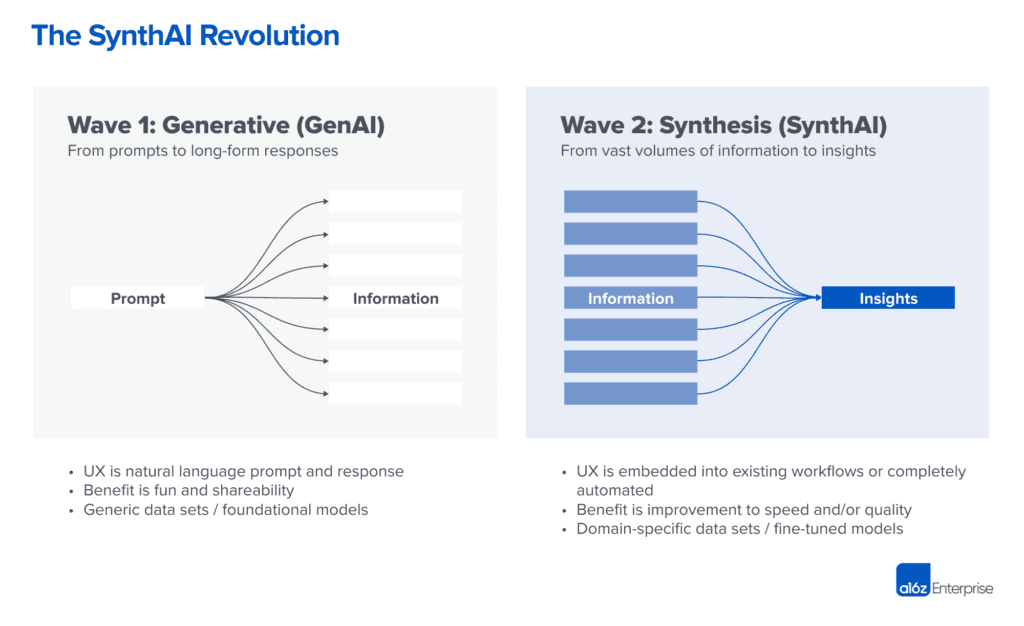

A super cool article from a16z introduced the notion of "Synthesis AI." According to them, we're moving from Wave 1 of AI, which generates more content, to Wave 2 of AI, which helps us by synthesizing information: showing us less, but more meaningful, content.

Why This Matters:

Quality Over Quantity: In B2B settings, we care about making better decisions faster, not wading through more content.

AI as an Assistant: Helps us understand complex info, like logs or infra topology, rather than generating new code.

3.2 Practical applications

3.2.1 Reading logs

“Synthesis AI” is already revolutionizing how we read and interpret logs. By highlighting critical information and patterns, it helps us quickly identify issues and understand system behavior without wading through endless lines of log data.

Why This Matters:

Proactive Issue Resolution: By automatically detecting anomalies and performance issues, teams can address problems before they impact users, reducing downtime and maintaining service reliability.

Efficiency Gains: AI-powered log analysis minimizes the time engineers spend manually parsing logs, allowing them to focus on more strategic initiatives.
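The "less but more meaningful" idea can be illustrated without any model at all. The sketch below is a plain, deterministic condensing step of the kind that could run before logs are handed to an AI assistant; the log format, the LOG_LINES sample, and the helper names are assumptions for the example.

```python
import re
from collections import Counter

# Hypothetical log lines; the "timestamp LEVEL message" format is an assumption.
LOG_LINES = [
    "2024-10-15T10:00:01 ERROR db: connection timeout after 30s",
    "2024-10-15T10:00:02 INFO api: request served in 12ms",
    "2024-10-15T10:00:05 ERROR db: connection timeout after 31s",
    "2024-10-15T10:00:09 WARN cache: eviction rate high",
    "2024-10-15T10:00:11 ERROR db: connection timeout after 30s",
]


def normalize(message):
    """Replace numbers with a placeholder so similar messages group together."""
    return re.sub(r"\d+", "<n>", message)


def summarize(lines, levels=("ERROR", "WARN")):
    """Count normalized messages for the given severity levels, most frequent first."""
    counts = Counter()
    for line in lines:
        _timestamp, level, message = line.split(" ", 2)
        if level in levels:
            counts[(level, normalize(message))] += 1
    return counts.most_common()


for (level, message), count in summarize(LOG_LINES):
    print(f"{count}x {level} {message}")
```

Five lines collapse into two findings, with the repeated database timeout on top. A model adds value on top of this kind of summary by explaining likely causes, not by replacing the counting.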

3.2.2 Understanding your infra's topology

When it comes to grasping your infrastructure's topology, the challenge isn't about the number of lines of code but understanding the broader implications of your configurations. It's about how components like VPCs are set up and how they interact. The complexity lies in the deep understanding required to navigate a volatile or sensitive environment—where even a small misconfiguration can lead to significant issues.

3.3 The importance of complementary, deterministic approaches

While AI tools like “Synthesis AI” offer valuable assistance, it's crucial to complement them with deterministic methods, such as accurately mapping your infrastructure. These approaches ensure that your infrastructure is intentionally designed and well-understood, reducing the risk of misconfigurations and security vulnerabilities.

Key Points:

Deterministic Mapping Is Essential: Structured maps of your infrastructure enable better decision-making and highlight potential issues before they become critical.

Avoiding Oversimplification: Relying solely on AI can oversimplify complex systems. Deterministic methods maintain the necessary depth of understanding.

Data Accessibility and Structure: Making infrastructure data queryable in a structured way allows for more effective analysis and management.
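As a minimal sketch of what "queryable in a structured way" can mean, the example below stores infrastructure as a dependency graph and answers one deterministic question: what is affected if this resource changes? The DEPENDENTS map, the resource names, and the impacted_by helper are hypothetical.

```python
from collections import deque

# Hypothetical dependency map: resource -> resources that directly depend on it.
DEPENDENTS = {
    "vpc.main": ["subnet.public", "subnet.private"],
    "subnet.public": ["instance.web"],
    "subnet.private": ["db.orders"],
    "instance.web": [],
    "db.orders": [],
}


def impacted_by(resource, dependents):
    """Breadth-first walk returning everything downstream of a resource."""
    seen = []
    queue = deque(dependents.get(resource, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # already visited via another path
        seen.append(current)
        queue.extend(dependents.get(current, []))
    return seen


print(impacted_by("vpc.main", DEPENDENTS))
```

Unlike a generated answer, the result of this query is the same every time and can be traced back to the map, which is what makes it a useful complement to AI assistance.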

Conclusion

In the end, even if IaC code generation isn't quite there yet, improvements are on the horizon. We must remain cautious, always reviewing AI-generated code and relying on deterministic tools to add that extra layer of security. Use AI as a tool to assist you, not replace you—especially when it comes to your infra.

Cheers! From Roxane @ Anyshift (ex AI-researcher)